New research suggests that watermarking tools meant to block AI image edits may backfire. Instead of stopping models like Stable Diffusion from making changes, some protections actually help the AI follow editing prompts more closely, making unwanted manipulations even easier.

There is a notable and robust strand in computer vision literature dedicated to protecting copyrighted images from being trained into AI models, or being used in direct image>image AI processes. Systems of this kind are generally aimed at Latent Diffusion Models (LDMs) such as Stable Diffusion and Flux, which use noise-based procedures to encode and decode imagery.

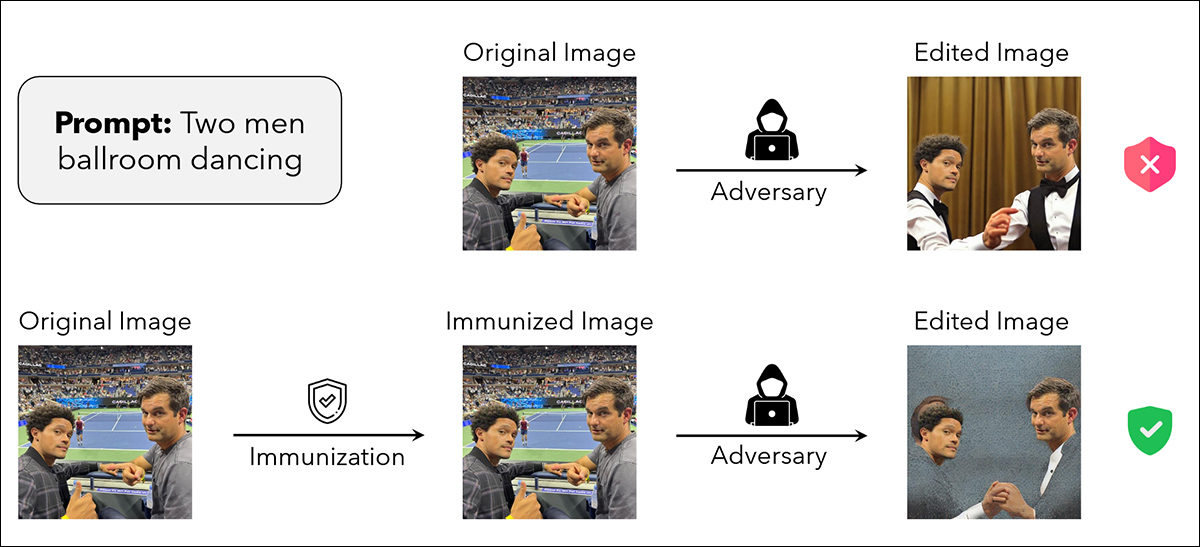

By inserting adversarial noise into otherwise normal-looking images, it can be possible to cause image detectors to guess image content incorrectly, and to hobble image-generating systems from exploiting copyrighted data:

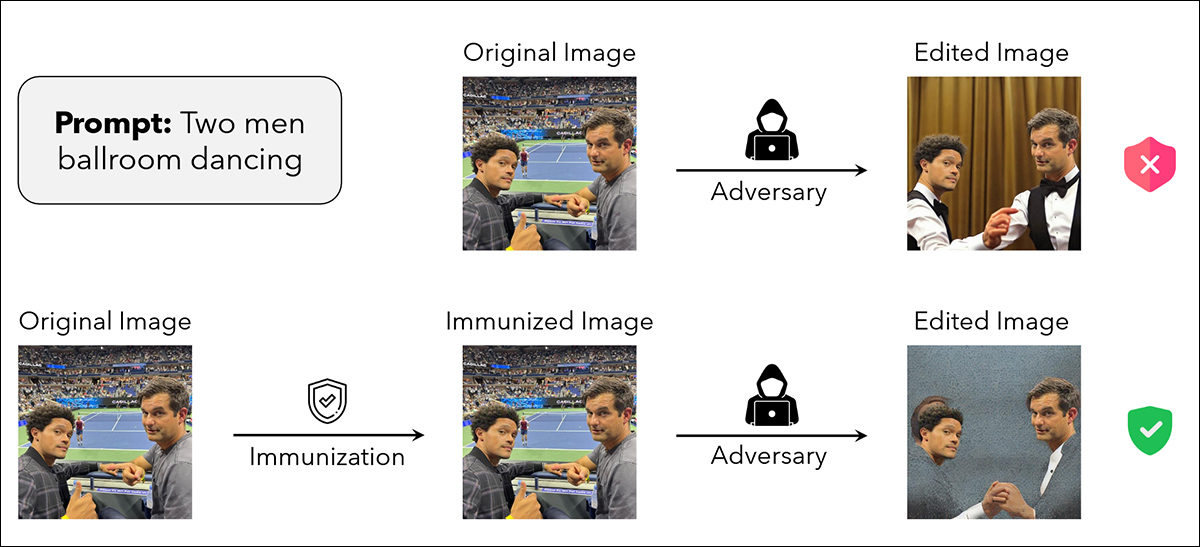

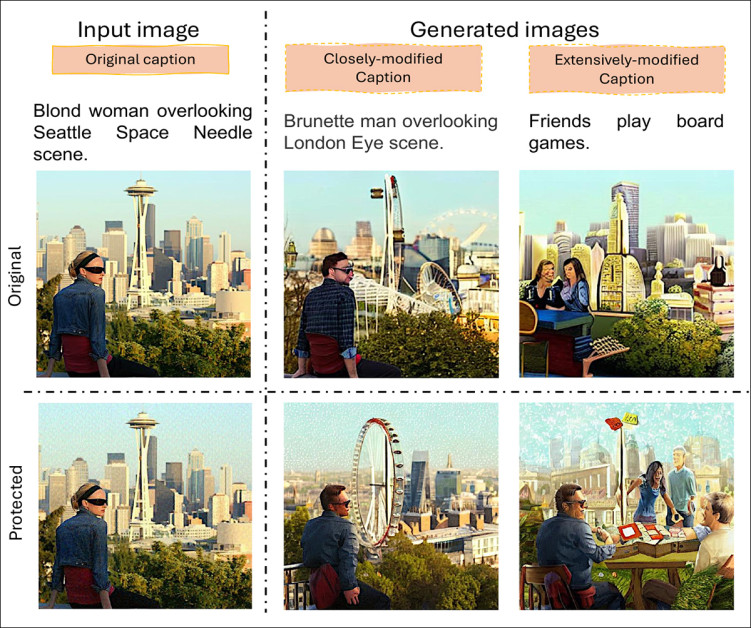

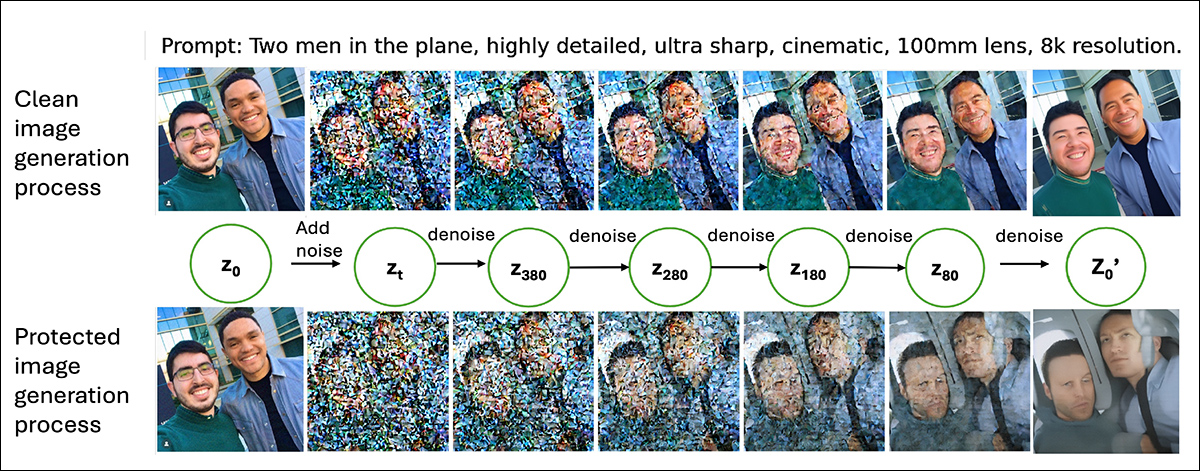

From the MIT paper ‘Raising the Cost of Malicious AI-Powered Image Editing’, examples of a source image ‘immunized’ against manipulation (lower row). Source: https://arxiv.org/pdf/2302.06588

Since an artists’ backlash against Stable Diffusion’s liberal use of web-scraped imagery (including copyrighted imagery) in 2023, the research scene has produced multiple variations on the same theme: the idea that images can be invisibly ‘poisoned’ against being trained into AI systems or sucked into generative AI pipelines, without adversely affecting the quality of the image for the average viewer.

In all cases, there is a direct correlation between the intensity of the imposed perturbation, the extent to which the image is subsequently protected, and the extent to which the image doesn’t look quite as good as it should:

Though the quality of the research PDF does not completely illustrate the problem, greater amounts of adversarial perturbation sacrifice quality for security. Here we see the gamut of quality disturbances in the 2020 ‘Fawkes’ project led by the University of Chicago. Source: https://arxiv.org/pdf/2002.08327

Of particular interest to artists seeking to protect their styles against unauthorized appropriation is the capacity of such systems not only to obfuscate identity and other information, but to ‘convince’ an AI training process that it is seeing something other than what it is really seeing, so that connections do not form between semantic and visual domains for ‘protected’ training data (e.g., a prompt such as ‘In the style of Paul Klee’).

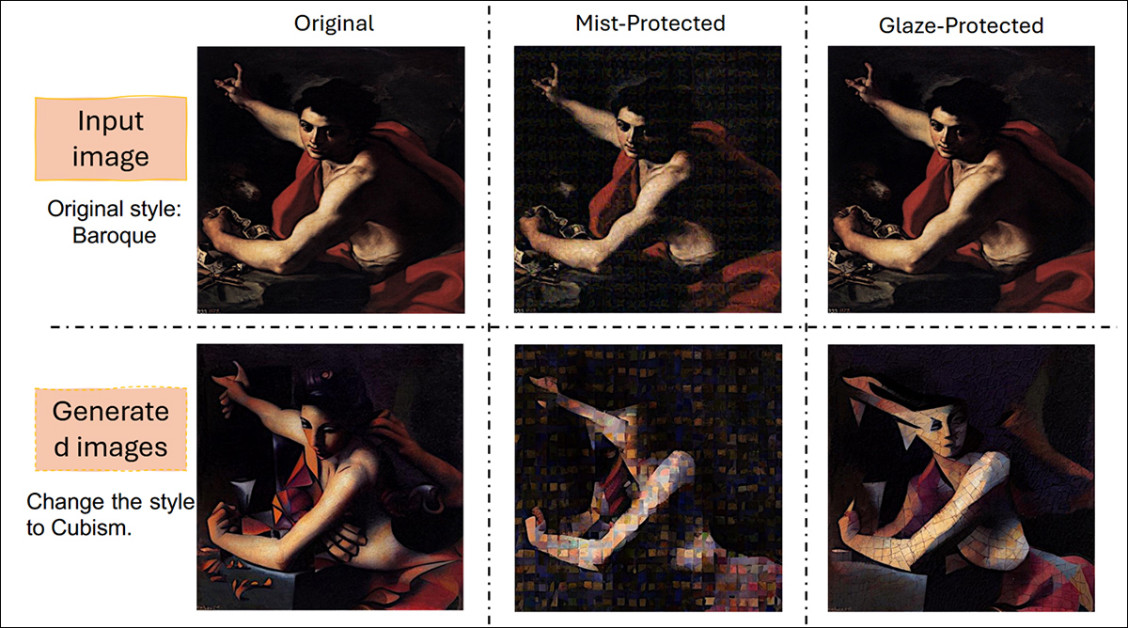

Mist and Glaze are two popular injection methods capable of preventing, or at least severely hobbling, attempts to use copyrighted styles in AI workflows and training routines. Source: https://arxiv.org/pdf/2506.04394

Own Goal

Now, new research from the US has found not only that perturbations can fail to protect an image, but that adding perturbation can actually improve the image’s exploitability in all the AI processes that perturbation is meant to immunize against.

The paper states:

‘In our experiments with various perturbation-based image protection methods across multiple domains (natural scene images and artworks) and editing tasks (image-to-image generation and style editing), we discover that such protection does not achieve this goal completely.

‘In most scenarios, diffusion-based editing of protected images generates a desirable output image which adheres precisely to the guidance prompt.

‘Our findings suggest that adding noise to images may paradoxically increase their association with given text prompts during the generation process, leading to unintended consequences such as better resultant edits.

‘Hence, we argue that perturbation-based methods may not provide a sufficient solution for robust image protection against diffusion-based editing.’

In tests, the protected images were exposed to two familiar AI editing scenarios: straightforward image-to-image generation and style transfer. These processes reflect the common ways that AI models might exploit protected content, either by directly altering an image, or by borrowing its stylistic traits for use elsewhere.

The protected images, drawn from standard sources of photography and artwork, were run through these pipelines to see whether the added perturbations could block or degrade the edits.

Instead, the presence of protection often seemed to sharpen the model’s alignment with the prompts, producing clean, accurate outputs where some failure had been expected.

The authors advise, in effect, that this very popular method of protection may be providing a false sense of security, and that any such perturbation-based immunization approaches should be tested thoroughly against the authors’ own methods.

Methodology

The authors ran experiments using three protection methods that apply carefully-designed adversarial perturbations: PhotoGuard; Mist; and Glaze.

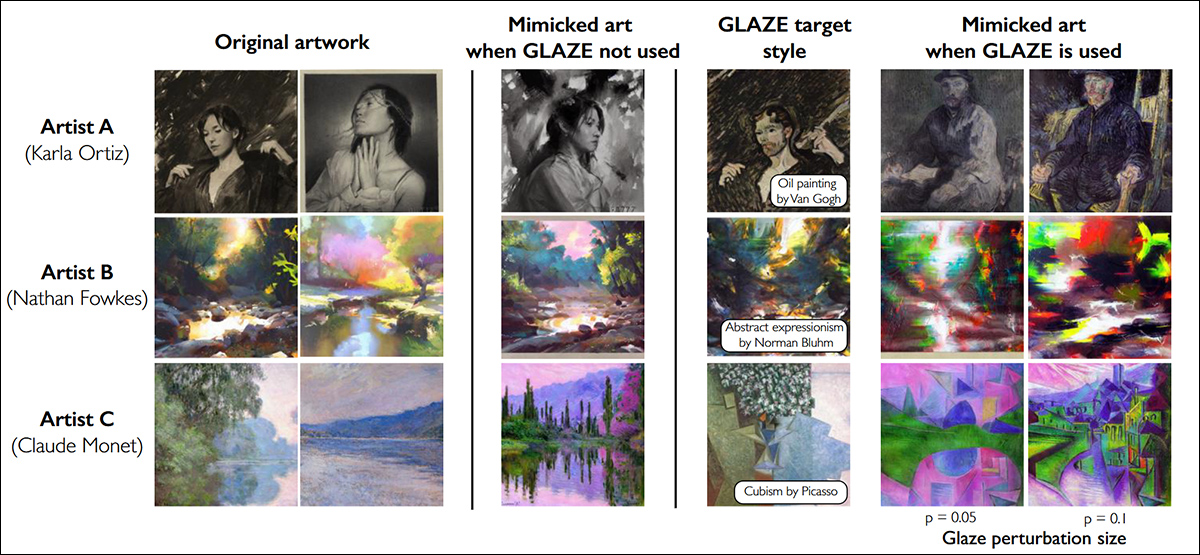

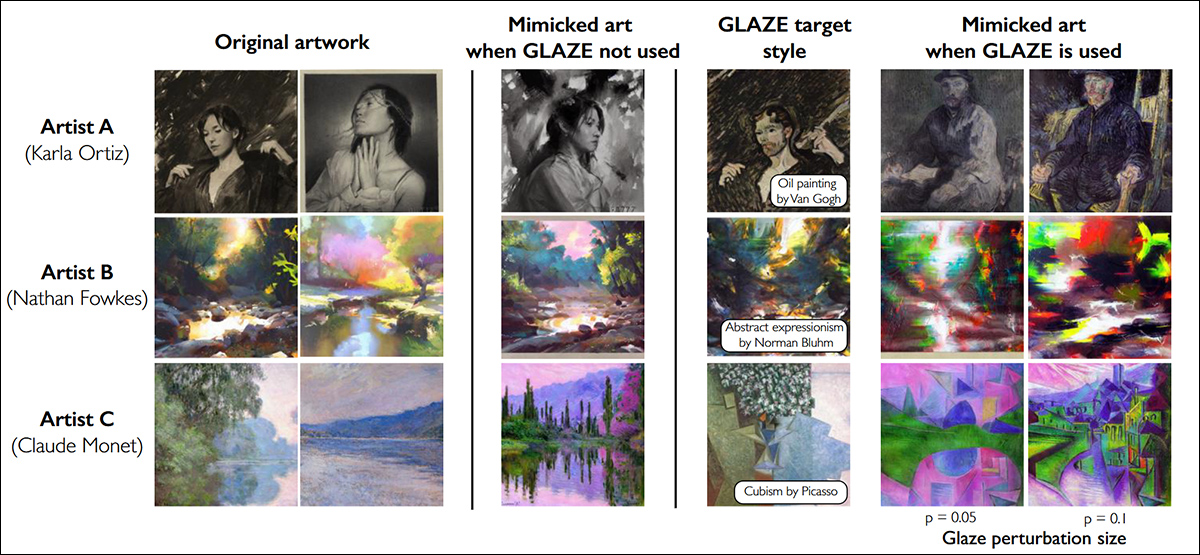

Glaze, one of the frameworks tested by the authors, illustrating Glaze protection examples for three artists. The first two columns show the original artworks; the third column shows mimicry results without protection; the fourth, style-transferred versions used for cloak optimization, along with the target style name. The fifth and sixth columns show mimicry results with cloaking applied at perturbation levels p = 0.05 and p = 0.1. All results use Stable Diffusion models. Source: https://arxiv.org/pdf/2302.04222

PhotoGuard was applied to natural scene images, while Mist and Glaze were used on artworks (i.e., ‘artistically-styled’ domains).

Tests covered both natural and artistic images to reflect possible real-world uses. The effectiveness of each method was assessed by checking whether an AI model could still produce realistic and prompt-relevant edits when working on protected images; if the resulting images appeared convincing and matched the prompts, the protection was judged to have failed.

Stable Diffusion v1.5 was used as the pre-trained image generator for the researchers’ editing tasks. Five seeds were selected to ensure reproducibility: 9222, 999, 123, 66, and 42. All other generation settings, such as guidance scale, strength, and total steps, followed the default values used in the PhotoGuard experiments.

PhotoGuard was tested on natural scene images using the Flickr8k dataset, which contains over 8,000 images paired with up to five captions each.

Opposing Ideas

Two sets of modified captions were created from the first caption of each image with the help of Claude Sonnet 3.5. One set contained prompts that were contextually close to the original captions; the other set contained prompts that were contextually distant.

For example, from the original caption ‘A young girl in a pink dress going into a wooden cabin’, a close prompt would be ‘A young boy in a blue shirt going into a brick house’. In contrast, a distant prompt would be ‘Two cats lounging on a couch’.

Close prompts were constructed by replacing nouns and adjectives with semantically similar terms; far prompts were generated by instructing the model to create captions that were contextually very different.

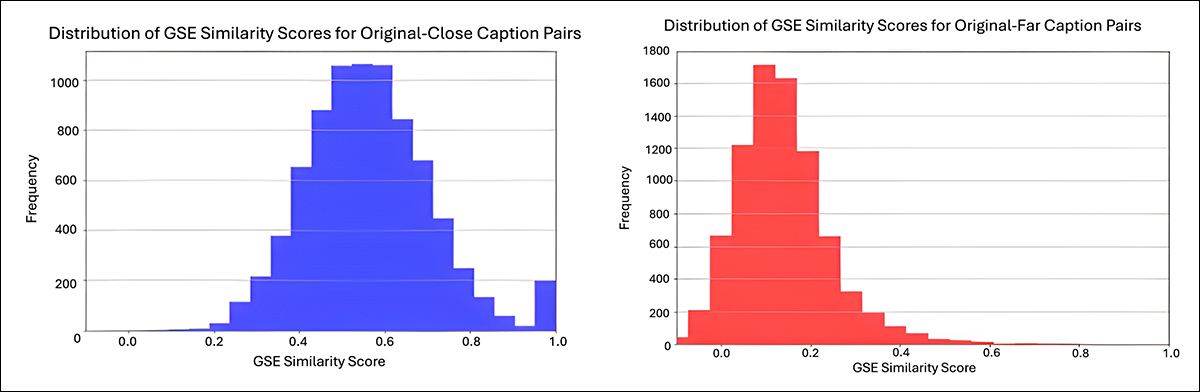

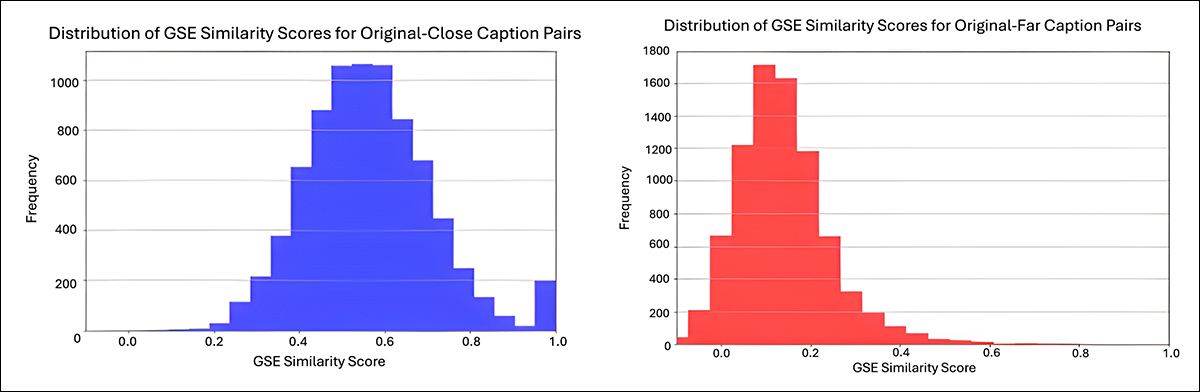

All generated captions were manually checked for quality and semantic relevance. Google’s Universal Sentence Encoder was used to calculate semantic similarity scores between the original and modified captions:

From the supplementary material, semantic similarity distributions for the modified captions used in Flickr8k tests. The graph on the left shows the similarity scores for closely modified captions, averaging around 0.6. The graph on the right shows the extensively modified captions, averaging around 0.1, reflecting greater semantic distance from the original captions. Values were calculated using Google’s Universal Sentence Encoder. Source: https://sigport.org/sites/default/files/docs/IncompleteProtection_SM_0.pdf

Each image, along with its protected version, was edited using both the close and far prompts. The Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE) was used to assess image quality:

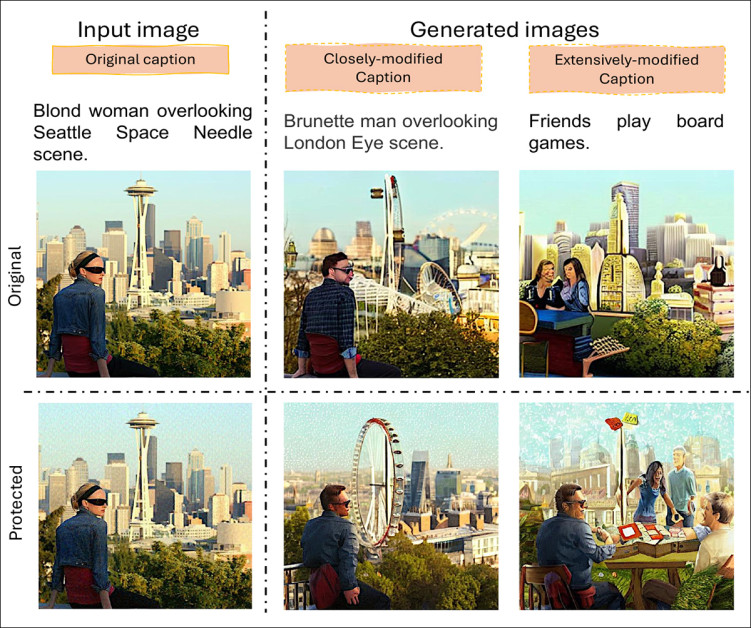

Image-to-image generation results on natural photographs protected by PhotoGuard. Despite the presence of perturbations, Stable Diffusion v1.5 successfully followed both small and large semantic changes in the editing prompts, producing realistic outputs that matched the new instructions.

The generated images scored 17.88 on BRISQUE (where lower scores indicate better perceptual quality), with 17.82 for close prompts and 17.94 for far prompts, while the original images scored 22.27. This shows that the edited images remained close in quality to the originals.

Metrics

To judge how well the protections interfered with AI editing, the researchers measured how closely the final images matched the instructions they were given, using scoring systems that compare the image content to the text prompt.

To this end, the CLIP-S metric uses a model that can understand both images and text to check how similar they are, while PAC-S++ adds extra samples created by AI to align its comparison more closely to a human estimation.

These Image-Text Alignment (ITA) scores denote how accurately the AI followed the instructions when modifying a protected image: if a protected image still led to a highly aligned output, the protection was deemed to have failed to block the edit.
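As a rough illustration of how an alignment score of this kind behaves, the sketch below follows the commonly cited CLIPScore formulation, in which the cosine similarity between an image embedding and a text embedding is clamped at zero and rescaled by a constant (2.5 in the original CLIPScore definition). The function name `clip_s` and the two-dimensional toy embeddings are hypothetical; real embeddings would come from a CLIP image encoder and text encoder, and this is not the authors' evaluation code.

```python
import math


def clip_s(image_emb, text_emb, w=2.5):
    """CLIPScore-style alignment: w * max(cosine similarity, 0)."""
    dot = sum(i * t for i, t in zip(image_emb, text_emb))
    norm = math.sqrt(sum(i * i for i in image_emb)) * math.sqrt(
        sum(t * t for t in text_emb)
    )
    if norm == 0.0:
        raise ValueError("embeddings must be non-zero")
    return w * max(dot / norm, 0.0)


# Hypothetical toy embeddings standing in for CLIP outputs.
prompt_emb = [1.0, 0.0]
edit_from_unprotected = [0.6, 0.8]
edit_from_protected = [0.8, 0.6]

# A higher score means the edited image sits closer to the editing prompt.
print(clip_s(edit_from_unprotected, prompt_emb))
print(clip_s(edit_from_protected, prompt_emb))
```

In this toy case the edit made from the 'protected' image scores higher, which is the pattern the paper reports: the protection has not stopped the output from matching the prompt.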

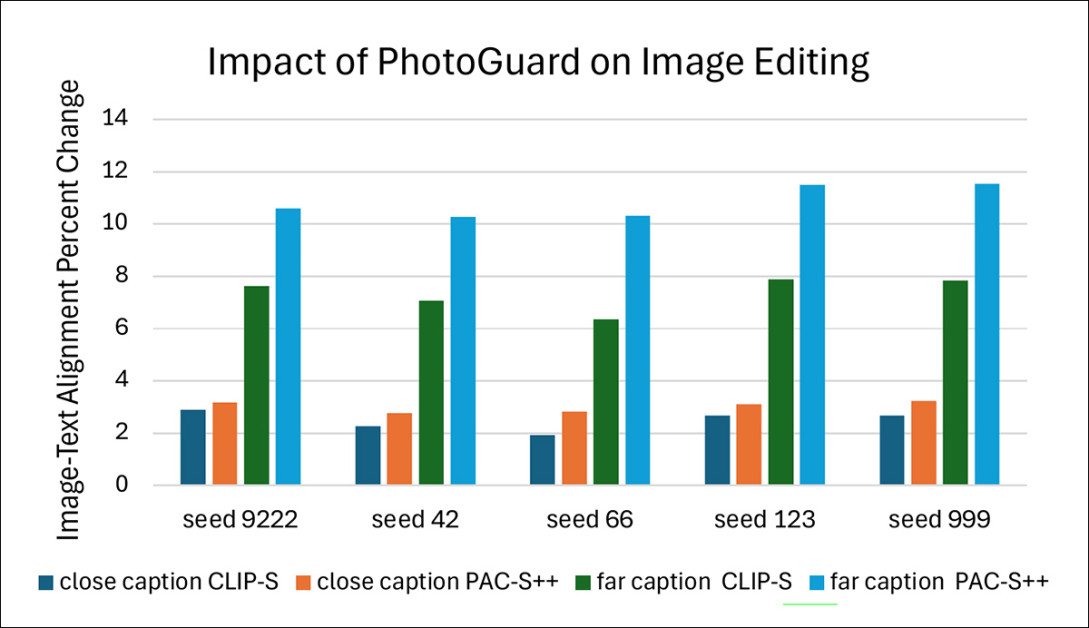

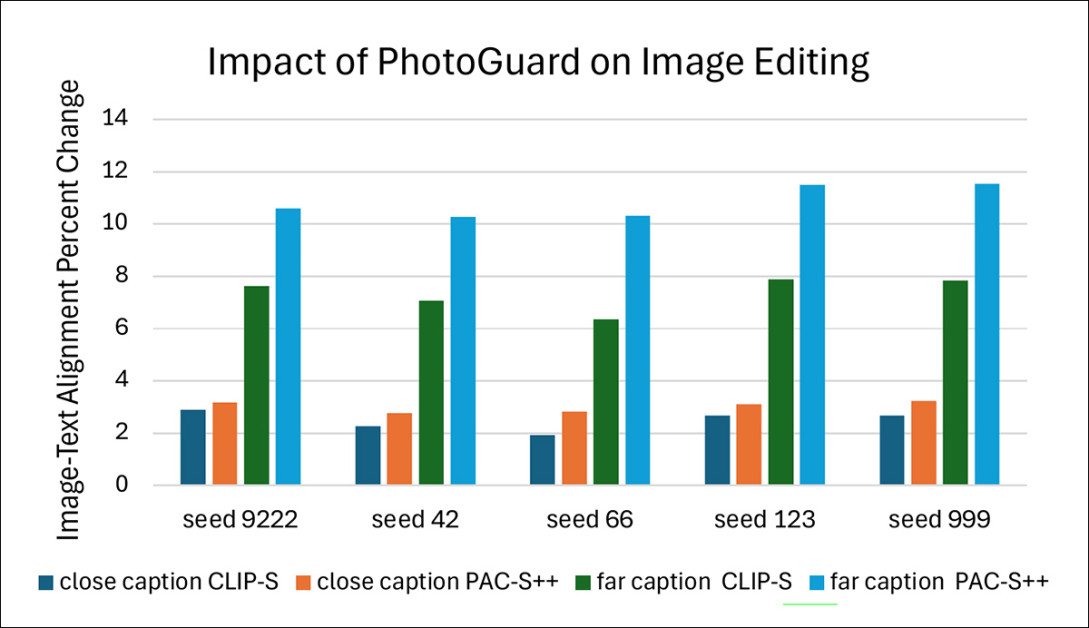

Effect of protection on the Flickr8k dataset across five seeds, using both close and distant prompts. Image-text alignment was measured using CLIP-S and PAC-S++ scores.

The researchers compared how well the AI followed prompts when editing protected images versus unprotected ones. They first looked at the difference between the two, called the Actual Change. Then the difference was scaled to create a Percentage Change, making it easier to compare results across many tests.

This process revealed whether the protections made it harder or easier for the AI to match the prompts. The tests were repeated five times using different random seeds, covering both small and large changes to the original captions.
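The two quantities can be sketched as follows. The article does not state exactly how the difference was scaled, so this example assumes the conventional choice of dividing by the unprotected score; the function names and the example scores are hypothetical.

```python
def actual_change(protected_score, unprotected_score):
    """Difference in alignment score: protected minus unprotected."""
    return protected_score - unprotected_score


def percentage_change(protected_score, unprotected_score):
    """Actual Change relative to the unprotected score, in percent.

    Assumption: the scaling baseline is the unprotected score.
    """
    if unprotected_score == 0:
        raise ValueError("unprotected score must be non-zero")
    return 100.0 * actual_change(protected_score, unprotected_score) / unprotected_score


# Hypothetical alignment scores for one prompt and one seed.
unprotected = 0.50
protected = 0.55

print(actual_change(protected, unprotected))
print(percentage_change(protected, unprotected))
```

Under this convention a positive Percentage Change means the protected image produced an edit that matched the prompt better than the unprotected one did, i.e., the protection made the edit easier.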

Art Attack

For the style transfer tests on natural photographs, the Flickr1024 dataset was used, containing over one thousand high-quality images. Each image was edited with prompts that followed the pattern: ‘change the style to [V]’, where [V] represented one of seven well-known art styles: Cubism; Post-Impressionism; Impressionism; Surrealism; Baroque; Fauvism; and Renaissance.
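The prompt pattern is simple enough to express directly. The snippet below is only an illustration of the template described above; the names `STYLES` and `style_prompts` are hypothetical.

```python
# The seven art styles named in the article.
STYLES = [
    "Cubism",
    "Post-Impressionism",
    "Impressionism",
    "Surrealism",
    "Baroque",
    "Fauvism",
    "Renaissance",
]


def style_prompts(styles=STYLES):
    """Fill the 'change the style to [V]' template for each style."""
    return [f"change the style to {style}" for style in styles]


for prompt in style_prompts():
    print(prompt)
```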

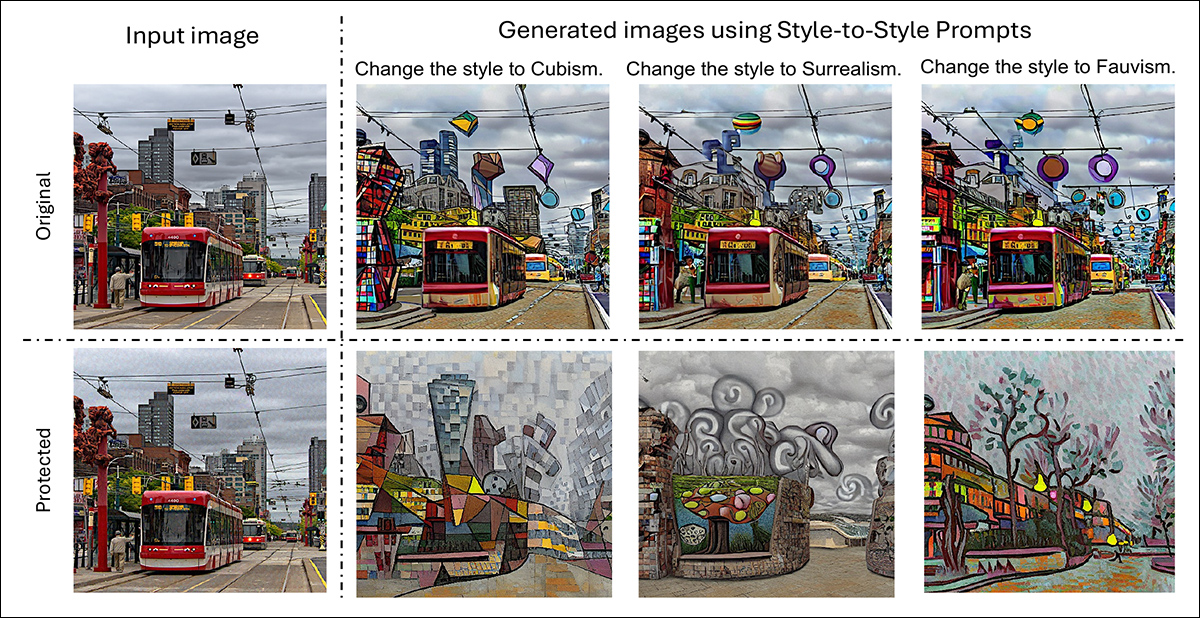

The process involved applying PhotoGuard to the original images, generating protected versions, and then running both protected and unprotected images through the same set of style transfer edits:

Original and protected versions of a natural scene image, each edited to apply Cubism, Surrealism, and Fauvism styles.

To test protection methods on artwork, style transfer was performed on images from the WikiArt dataset, which curates a wide range of artistic styles. The editing prompts followed the same format as before, instructing the AI to change the style to a randomly selected, unrelated style drawn from the WikiArt labels.

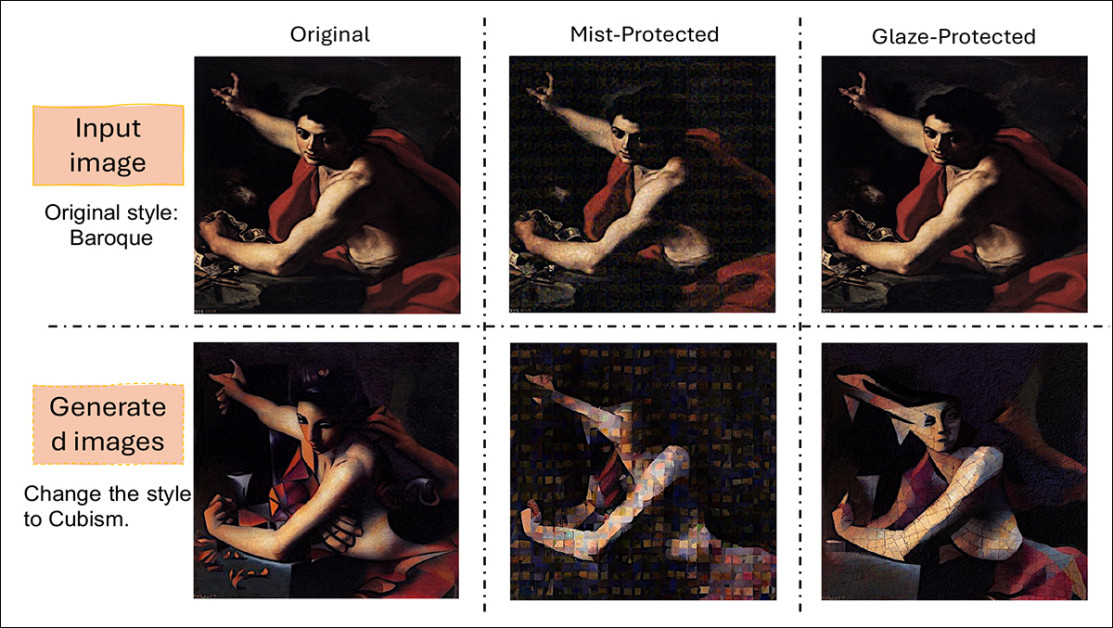

Both Glaze and Mist protection methods were applied to the images before the edits, allowing the researchers to observe how well each defense could block or distort the style transfer results:

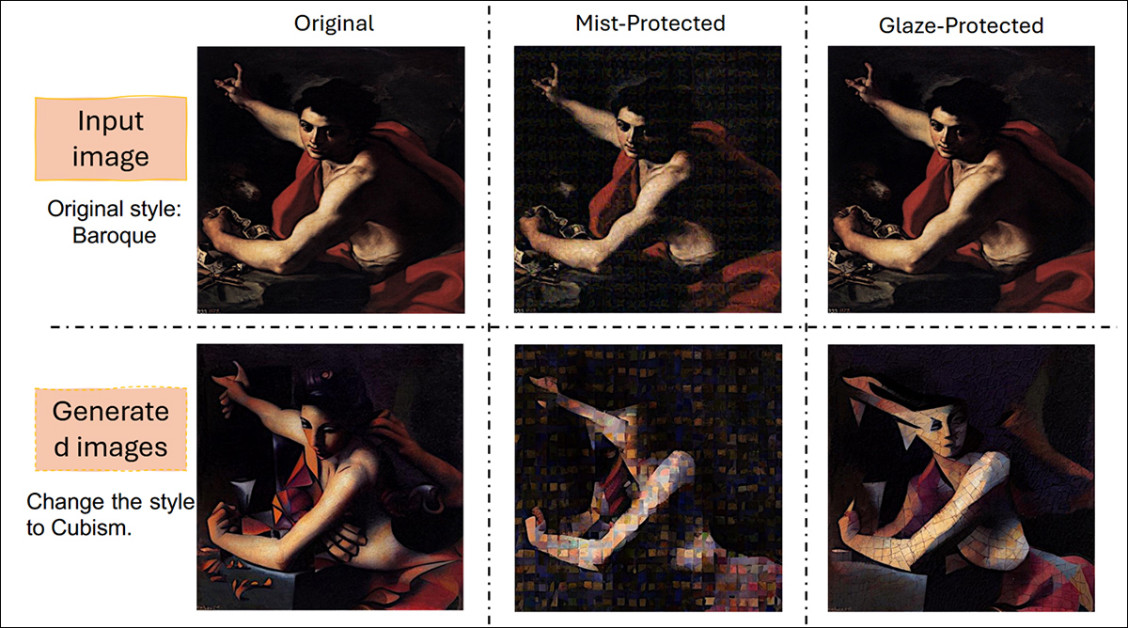

Examples of how protection methods affect style transfer on artwork. The original Baroque image is shown alongside versions protected by Mist and Glaze. After applying Cubism style transfer, differences in how each protection alters the final output can be seen.

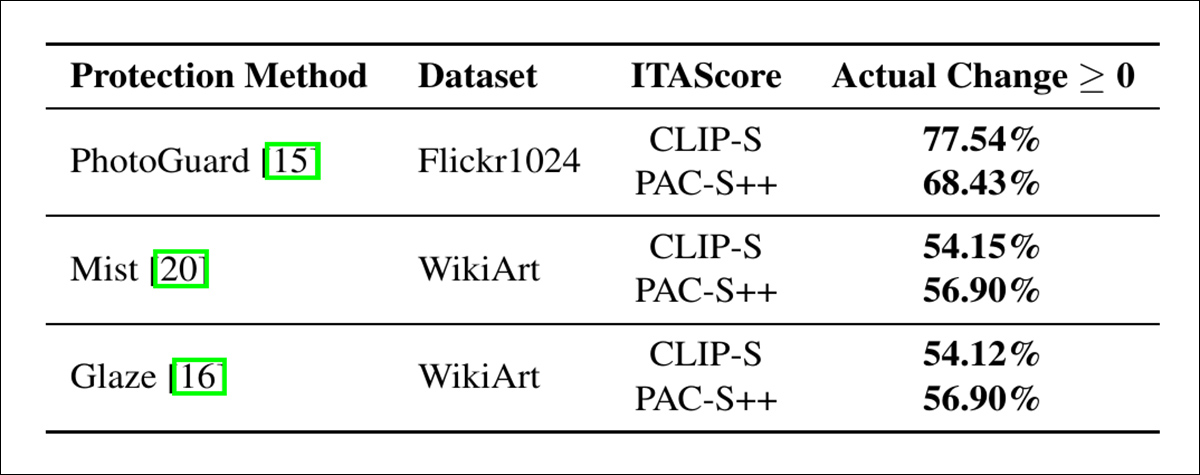

The researchers tested the comparisons quantitatively as well:

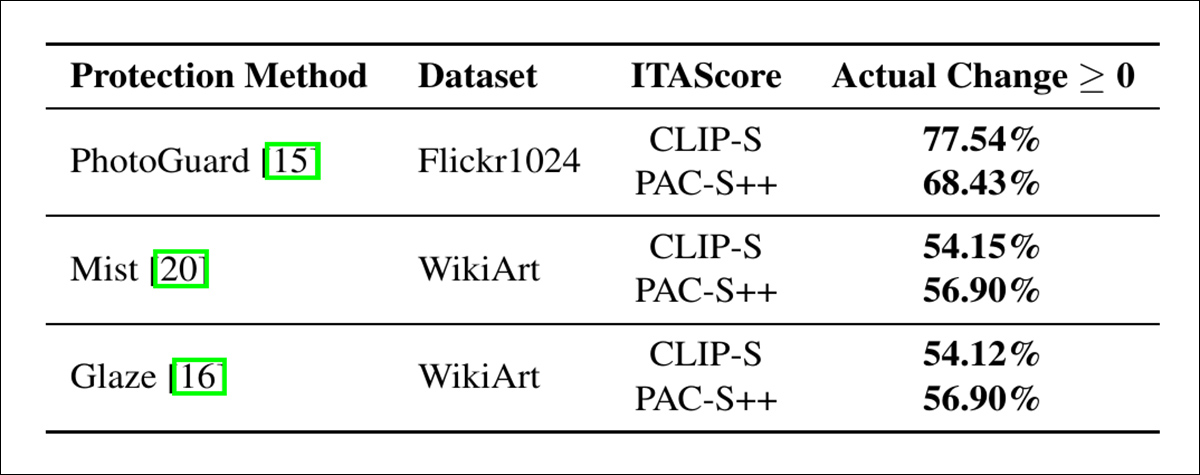

Changes in image-text alignment scores after style transfer edits.

Of these results, the authors comment:

‘The results highlight a significant limitation of adversarial perturbations for protection. Instead of impeding alignment, adversarial perturbations often enhance the generative model’s responsiveness to prompts, inadvertently enabling exploiters to produce outputs that align more closely with their objectives. Such protection is not disruptive to the image editing process and may not be able to prevent malicious agents from copying unauthorized material.

‘The unintended consequences of using adversarial perturbations reveal vulnerabilities in existing methods and underscore the urgent need for more effective protection techniques.’

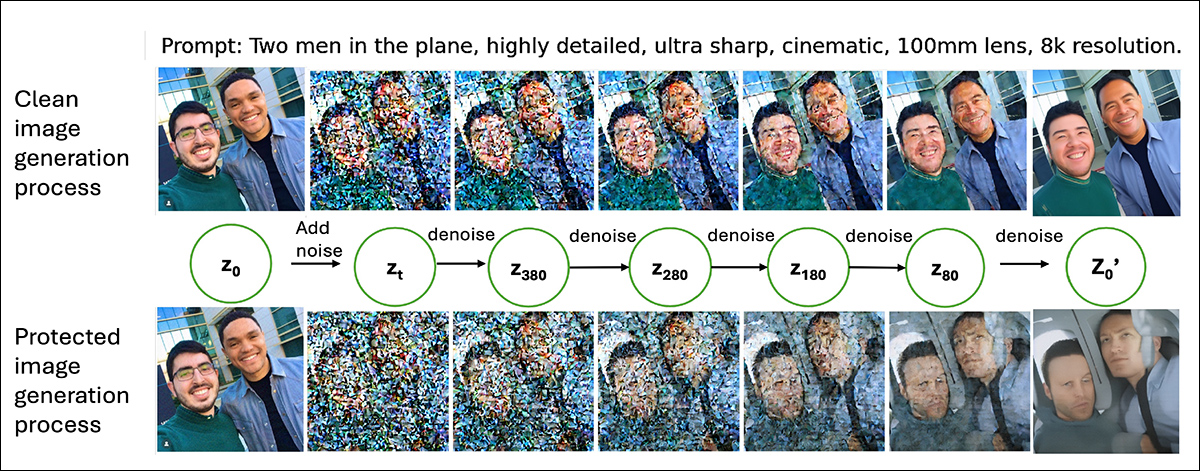

The authors explain that the unexpected results can be traced to how diffusion models work: LDMs edit images by first converting them into a compressed version called a latent; noise is then added to this latent through many steps, until the data becomes almost random.

The model reverses this process during generation, removing the noise step by step. At each stage of this reversal, the text prompt helps guide how the noise should be cleaned up, gradually shaping the image to match the prompt:

Comparison between generations from an unprotected image and a PhotoGuard-protected image, with intermediate latent states converted back into images for visualization.

Protection methods add small amounts of extra noise to the original image before it enters this process. While these perturbations are minor at first, they accumulate as the model applies its own layers of noise.

This buildup leaves more parts of the image ‘uncertain’ when the model begins removing noise. With greater uncertainty, the model leans more heavily on the text prompt to fill in the missing details, giving the prompt even more influence than it would normally have.

In effect, the protections make it easier for the AI to reshape the image to match the prompt, rather than harder.
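One way to build intuition for this argument is a toy signal-to-noise calculation. The sketch below is not the authors' analysis: it assumes the standard forward-diffusion form, in which a latent is scaled by the square root of a factor `alpha_bar` and mixed with Gaussian noise of variance `1 - alpha_bar`, and it treats the protective perturbation as independent zero-mean noise added to the clean latent. All names and numbers are hypothetical.

```python
def snr(alpha_bar, signal_var=1.0, perturbation_var=0.0):
    """Signal-to-noise ratio of a noised latent in a toy diffusion model.

    The clean signal is scaled by alpha_bar; the perturbation is scaled the
    same way but counts as noise, alongside the diffusion noise itself.
    """
    if not 0.0 < alpha_bar < 1.0:
        raise ValueError("alpha_bar must lie strictly between 0 and 1")
    noise_var = alpha_bar * perturbation_var + (1.0 - alpha_bar)
    return alpha_bar * signal_var / noise_var


# Compare an unprotected latent with a lightly perturbed one at several
# noise levels (high alpha_bar = little diffusion noise added so far).
for alpha_bar in (0.9, 0.5, 0.1):
    clean = snr(alpha_bar)
    perturbed = snr(alpha_bar, perturbation_var=0.1)
    print(alpha_bar, clean, perturbed)
```

Under these assumptions the perturbed latent carries less recoverable image signal at every noise level, which is consistent with the explanation that the model has more 'uncertain' content to fill in from the text prompt.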

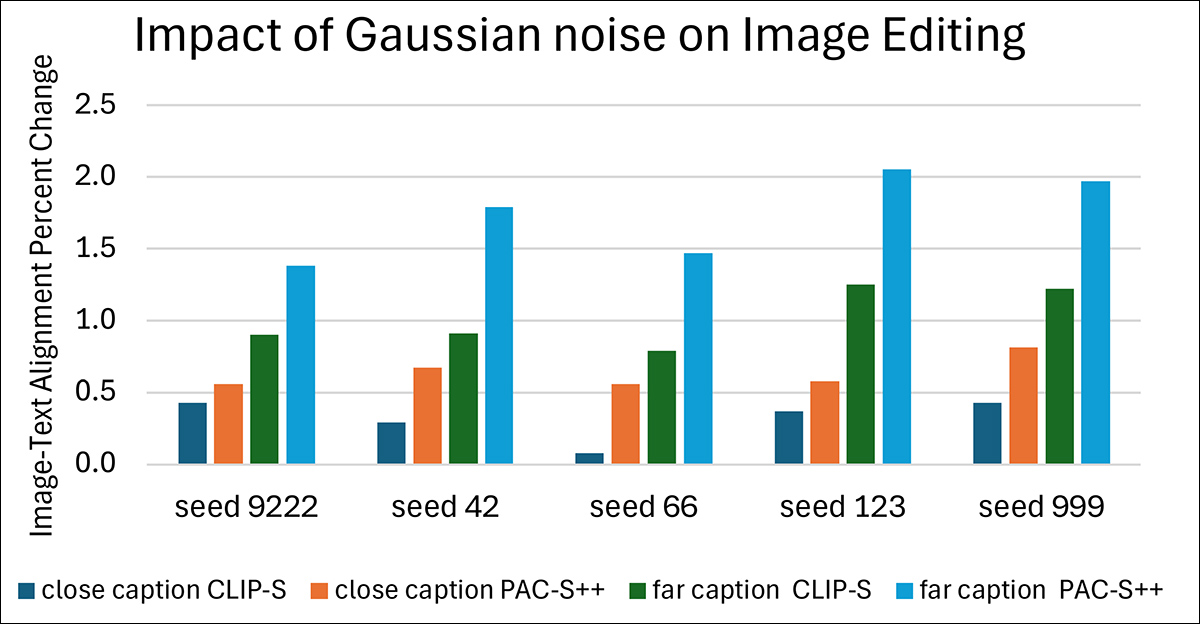

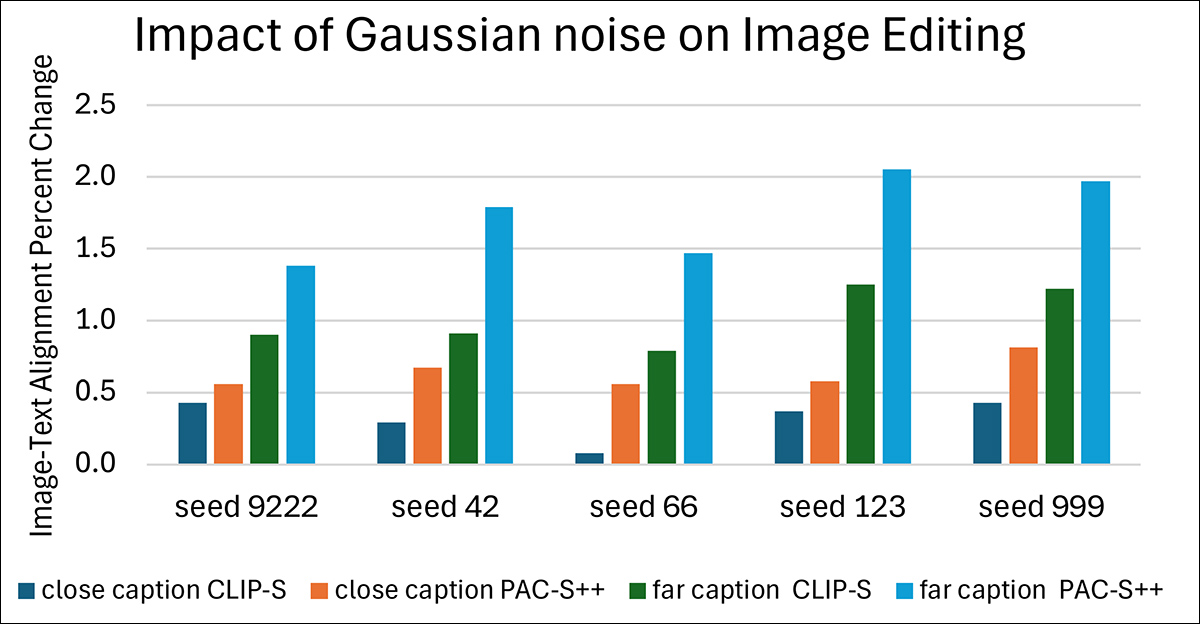

Finally, the authors conducted a test that replaced the crafted perturbations from the Raising the Cost of Malicious AI-Powered Image Editing paper with pure Gaussian noise.

The results followed the same pattern observed earlier: across all tests, the Percentage Change values remained positive. Even this random, unstructured noise led to stronger alignment between the generated images and the prompts.

Effect of simulated protection using Gaussian noise on the Flickr8k dataset.

This supported the underlying explanation that any added noise, regardless of its design, creates greater uncertainty for the model during generation, allowing the text prompt to exert even more control over the final image.
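A 'simulated protection' of this kind amounts to adding zero-mean Gaussian noise to pixel values. The sketch below shows the idea on a flat list of pixel intensities in the range 0 to 1; the function name, the noise level `sigma`, and the seed are hypothetical, since the article does not give the noise strength used in the paper.

```python
import random


def add_gaussian_noise(pixels, sigma, seed=0):
    """Add zero-mean Gaussian noise to pixel values, clipped to [0, 1]."""
    rng = random.Random(seed)  # seeded so the example is repeatable
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


# A tiny hypothetical 'image' of five pixel intensities.
pixels = [0.0, 0.25, 0.5, 0.75, 1.0]
noisy = add_gaussian_noise(pixels, sigma=0.05)
print(noisy)
```

Unlike PhotoGuard, Mist, or Glaze, nothing here is optimized against a particular model, which is what makes the reported result notable: unstructured noise was enough to reproduce the effect.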

Conclusion

The research scene has been pushing adversarial perturbation at the LDM copyright issue for almost as long as LDMs have been around; but no resilient solutions have emerged from the extraordinary number of papers published on this tack.

Either the imposed disturbances excessively lower the quality of the image, or the patterns prove not to be resilient to manipulation and transformative processes.

However, it is a hard dream to abandon, since the alternative would seem to be third-party monitoring and provenance frameworks such as the Adobe-led C2PA scheme, which seeks to maintain a chain-of-custody for images from the camera sensor on, but which has no innate connection with the content depicted.

In any case, if adversarial perturbation is actually making the problem worse, as the new paper indicates could be true in many cases, one wonders if the search for copyright protection via such means falls under ‘alchemy’.

First published Monday, June 9, 2025

New analysis means that watermarking instruments meant to dam AI picture edits could backfire. As a substitute of stopping fashions like Steady Diffusion from making adjustments, some protections truly assist the AI observe modifying prompts extra intently, making undesirable manipulations even simpler.

There’s a notable and strong strand in pc imaginative and prescient literature devoted to defending copyrighted photos from being skilled into AI fashions, or being utilized in direct picture>picture AI processes. Methods of this type are typically geared toward Latent Diffusion Fashions (LDMs) reminiscent of Steady Diffusion and Flux, which use noise-based procedures to encode and decode imagery.

By inserting adversarial noise into in any other case normal-looking photos, it may be attainable to trigger picture detectors to guess picture content material incorrectly, and hobble image-generating techniques from exploiting copyrighted knowledge:

From the MIT paper ‘Elevating the Price of Malicious AI-Powered Picture Modifying’, examples of a supply picture ‘immunized’ towards manipulation (decrease row). Supply: https://arxiv.org/pdf/2302.06588

Since an artists’ backlash towards Steady Diffusion’s liberal use of web-scraped imagery (together with copyrighted imagery) in 2023, the analysis scene has produced a number of variations on the identical theme – the concept photos might be invisibly ‘poisoned’ towards being skilled into AI techniques or sucked into generative AI pipelines, with out adversely affecting the standard of the picture, for the common viewer.

In all circumstances, there’s a direct correlation between the depth of the imposed perturbation, the extent to which the picture is subsequently protected, and the extent to which the picture would not look fairly pretty much as good because it ought to:

Although the standard of the analysis PDF doesn’t fully illustrate the issue, better quantities of adversarial perturbation sacrifice high quality for safety. Right here we see the gamut of high quality disturbances within the 2020 ‘Fawkes’ challenge led by the College of Chicago. Supply: https://arxiv.org/pdf/2002.08327

Of explicit curiosity to artists searching for to guard their types towards unauthorized appropriation is the capability of such techniques not solely to obfuscate identification and different info, however to ‘persuade’ an AI coaching course of that it’s seeing one thing aside from it’s actually seeing, in order that connections don’t type between semantic and visible domains for ‘protected’ coaching knowledge (i.e., a immediate reminiscent of ‘Within the type of Paul Klee’).

Mist and Glaze are two in style injection strategies able to stopping, or at the very least severely hobbling makes an attempt to make use of copyrighted types in AI workflows and coaching routines. Supply: https://arxiv.org/pdf/2506.04394

Personal Purpose

Now, new analysis from the US has discovered not solely that perturbations can fail to guard a picture, however that including perturbation can truly enhance the picture’s exploitability in all of the AI processes that perturbation is supposed to immunize towards.

The paper states:

‘In our experiments with numerous perturbation-based picture safety strategies throughout a number of domains (pure scene photos and artworks) and modifying duties (image-to-image technology and magnificence modifying), we uncover that such safety doesn’t obtain this purpose fully.

‘In most situations, diffusion-based modifying of protected photos generates a fascinating output picture which adheres exactly to the steering immediate.

‘Our findings counsel that including noise to photographs could paradoxically improve their affiliation with given textual content prompts throughout the technology course of, resulting in unintended penalties reminiscent of higher resultant edits.

‘Therefore, we argue that perturbation-based strategies could not present a adequate answer for strong picture safety towards diffusion-based modifying.’

In exams, the protected photos had been uncovered to 2 acquainted AI modifying situations: simple image-to-image technology and type switch. These processes mirror the widespread ways in which AI fashions would possibly exploit protected content material, both by immediately altering a picture, or by borrowing its stylistic traits to be used elsewhere.

The protected photos, drawn from commonplace sources of images and paintings, had been run via these pipelines to see whether or not the added perturbations may block or degrade the edits.

As a substitute, the presence of safety typically appeared to sharpen the mannequin’s alignment with the prompts, producing clear, correct outputs the place some failure had been anticipated.

The authors advise, in impact, that this extremely popular methodology of safety could also be offering a false sense of safety, and that any such perturbation-based immunization approaches ought to be examined totally towards the authors’ personal strategies.

Methodology

The authors ran experiments utilizing three safety strategies that apply carefully-designed adversarial perturbations: PhotoGuard; Mist; and Glaze.

Glaze, one of many frameworks examined by the authors, illustrating Glaze safety examples for 3 artists. The primary two columns present the unique artworks; the third column exhibits mimicry outcomes with out safety; the fourth, style-transferred variations used for cloak optimization, together with the goal type identify. The fifth and sixth columns present mimicry outcomes with cloaking utilized at perturbation ranges p = 0.05 and p = 0.1. All outcomes use Steady Diffusion fashions. https://arxiv.org/pdf/2302.04222

PhotoGuard was utilized to pure scene photos, whereas Mist and Glaze had been used on artworks (i.e., ‘artistically-styled’ domains).

Checks coated each pure and creative photos to mirror attainable real-world makes use of. The effectiveness of every methodology was assessed by checking whether or not an AI mannequin may nonetheless produce lifelike and prompt-relevant edits when engaged on protected photos; if the ensuing photos appeared convincing and matched the prompts, the safety was judged to have failed.

Steady Diffusion v1.5 was used because the pre-trained picture generator for the researchers’ modifying duties. 5 seeds had been chosen to make sure reproducibility: 9222, 999, 123, 66, and 42. All different technology settings, reminiscent of steering scale, energy, and complete steps, adopted the default values used within the PhotoGuard experiments.

PhotoGuard was examined on pure scene photos utilizing the Flickr8k dataset, which incorporates over 8,000 photos paired with as much as 5 captions every.

Opposing Ideas

Two units of modified captions had been created from the primary caption of every picture with the assistance of Claude Sonnet 3.5. One set contained prompts that had been contextually shut to the unique captions; the opposite set contained prompts that had been contextually distant.

For instance, from the unique caption ‘A younger lady in a pink gown going right into a picket cabin’, a detailed immediate can be ‘A younger boy in a blue shirt going right into a brick home’. In contrast, a distant immediate can be ‘Two cats lounging on a sofa’.

Shut prompts had been constructed by changing nouns and adjectives with semantically comparable phrases; far prompts had been generated by instructing the mannequin to create captions that had been contextually very totally different.

All generated captions had been manually checked for high quality and semantic relevance. Google’s Common Sentence Encoder was used to calculate semantic similarity scores between the unique and modified captions:

From the supplementary materials, semantic similarity distributions for the modified captions utilized in Flickr8k exams. The graph on the left exhibits the similarity scores for intently modified captions, averaging round 0.6. The graph on the suitable exhibits the extensively modified captions, averaging round 0.1, reflecting better semantic distance from the unique captions. Values had been calculated utilizing Google’s Common Sentence Encoder. Supply: https://sigport.org/websites/default/information/docs/IncompleteProtection_SM_0.pdf

Every picture, together with its protected model, was edited utilizing each the shut and much prompts. The Blind/Referenceless Picture Spatial High quality Evaluator (BRISQUE) was used to evaluate picture high quality:

Picture-to-image technology outcomes on pure images protected by PhotoGuard. Regardless of the presence of perturbations, Steady Diffusion v1.5 efficiently adopted each small and enormous semantic adjustments within the modifying prompts, producing lifelike outputs that matched the brand new directions.

The generated photos scored 17.88 on BRISQUE, with 17.82 for shut prompts and 17.94 for much prompts, whereas the unique photos scored 22.27. This exhibits that the edited photos remained shut in high quality to the originals.

Metrics

To guage how properly the protections interfered with AI modifying, the researchers measured how intently the ultimate photos matched the directions they got, utilizing scoring techniques that in contrast the picture content material to the textual content immediate, to see how properly they align.

To this finish, the CLIP-S metric makes use of a mannequin that may perceive each photos and textual content to examine how comparable they’re, whereas PAC-S++, provides additional samples created by AI to align its comparability extra intently to a human estimation.

These Picture-Textual content Alignment (ITA) scores denote how precisely the AI adopted the directions when modifying a protected picture: if a protected picture nonetheless led to a extremely aligned output, it means the safety was deemed to have failed to dam the edit.

Impact of safety on the Flickr8k dataset throughout 5 seeds, utilizing each shut and distant prompts. Picture-text alignment was measured utilizing CLIP-S and PAC-S++ scores.

The researchers in contrast how properly the AI adopted prompts when modifying protected photos versus unprotected ones. They first regarded on the distinction between the 2, known as the Precise Change. Then the distinction was scaled to create a Proportion Change, making it simpler to check outcomes throughout many exams.

This course of revealed whether or not the protections made it tougher or simpler for the AI to match the prompts. The exams had been repeated 5 occasions utilizing totally different random seeds, masking each small and enormous adjustments to the unique captions.

Artwork Assault

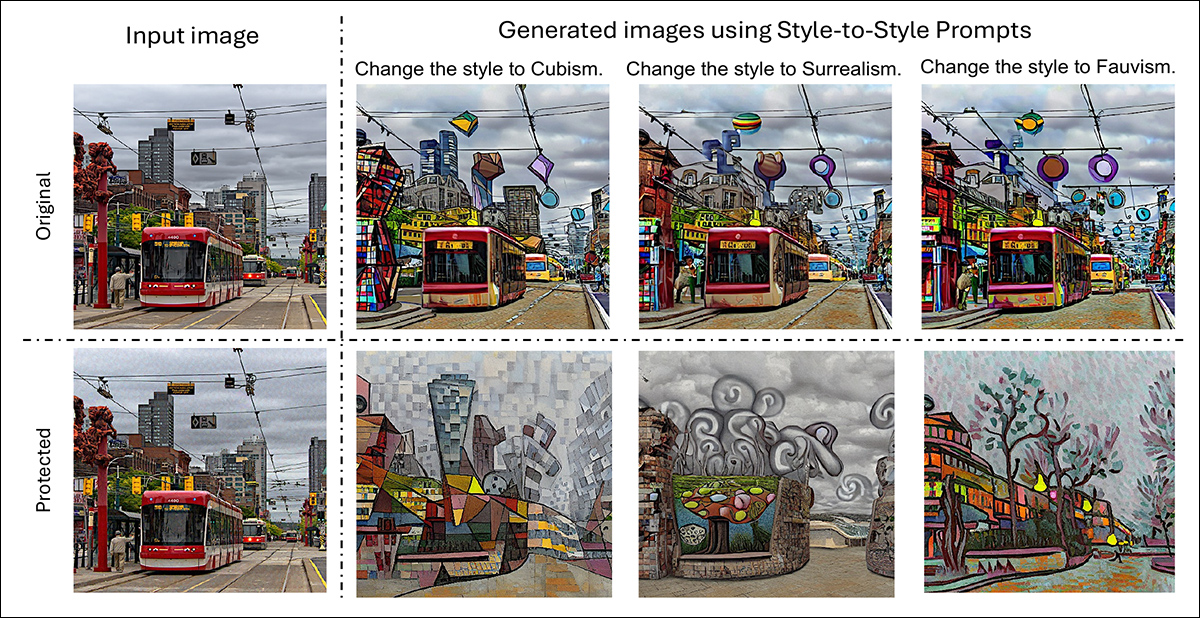

For the exams on pure images, the Flickr1024 dataset was used, containing over one thousand high-quality photos. Every picture was edited with prompts that adopted the sample: ‘change the type to [V]’, the place [V] represented one in every of seven well-known artwork types: Cubism; Put up-Impressionism; Impressionism; Surrealism; Baroque; Fauvism; and Renaissance.

The method concerned making use of PhotoGuard to the unique photos, producing protected variations, after which operating each protected and unprotected photos via the identical set of fashion switch edits:

Authentic and guarded variations of a pure scene picture, every edited to use Cubism, Surrealism, and Fauvism types.

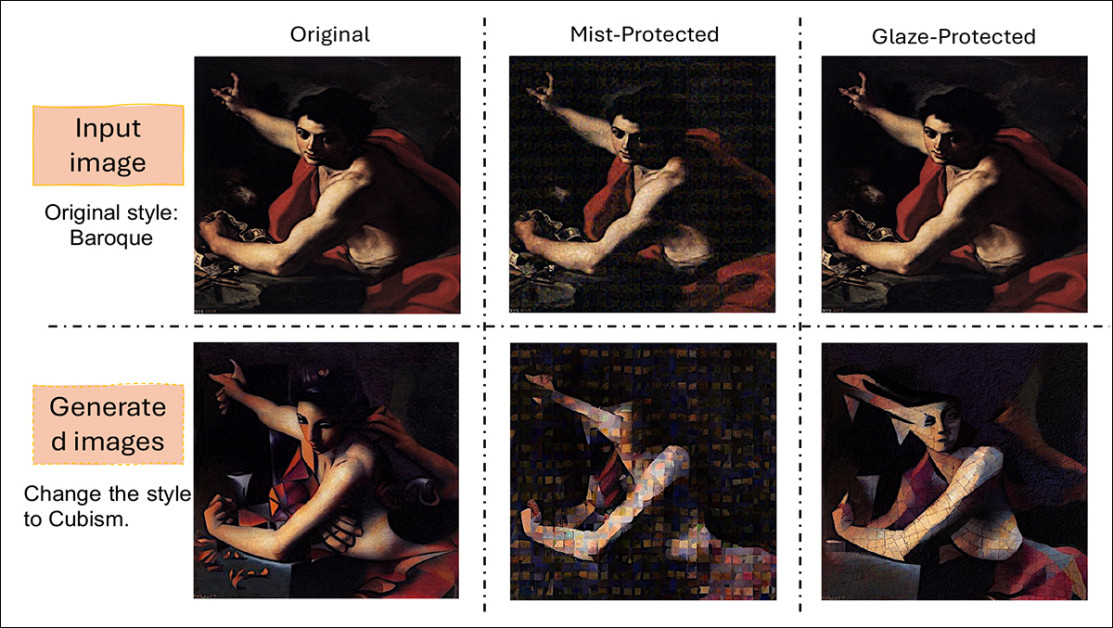

To check safety strategies on paintings, type switch was carried out on photos from the WikiArt dataset, which curates a variety of creative types. The modifying prompts adopted the identical format as earlier than, instructing the AI to alter the type to a randomly chosen, unrelated type drawn from the WikiArt labels.

Each Glaze and Mist safety strategies had been utilized to the pictures earlier than the edits, permitting the researchers to look at how properly every protection may block or distort the type switch outcomes:

Examples of how safety strategies have an effect on type switch on paintings. The unique Baroque picture is proven alongside variations protected by Mist and Glaze. After making use of Cubism type switch, variations in how every safety alters the ultimate output might be seen.

The researchers examined the comparisons quantitatively as properly:

Modifications in image-text alignment scores after type switch edits.

Of those outcomes, the authors remark:

‘The outcomes spotlight a big limitation of adversarial perturbations for defense. As a substitute of impeding alignment, adversarial perturbations typically improve the generative mannequin’s responsiveness to prompts, inadvertently enabling exploiters to supply outputs that align extra intently with their aims. Such safety just isn’t disruptive to the picture modifying course of and will not be capable of stop malicious brokers from copying unauthorized materials.

‘The unintended penalties of utilizing adversarial perturbations reveal vulnerabilities in current strategies and underscore the pressing want for more practical safety methods.’

The authors clarify that the sudden outcomes might be traced to how diffusion fashions work: LDMs edit photos by first changing them right into a compressed model known as a latent; noise is then added to this latent via many steps, till the information turns into nearly random.

The mannequin reverses this course of throughout technology, eradicating the noise step-by-step. At every stage of this reversal, the textual content immediate helps information how the noise ought to be cleaned up, step by step shaping the picture to match the immediate:

Comparability between generations from an unprotected picture and a PhotoGuard-protected picture, with intermediate latent states transformed again into photos for visualization.

Safety strategies add small quantities of additional noise to the unique picture earlier than it enters this course of. Whereas these perturbations are minor at first, they accumulate because the mannequin applies its personal layers of noise.

This buildup leaves extra components of the picture ‘unsure’ when the mannequin begins eradicating noise. With better uncertainty, the mannequin leans extra closely on the textual content immediate to fill within the lacking particulars, giving the immediate much more affect than it might usually have.

In impact, the protections make it simpler for the AI to reshape the picture to match the immediate, relatively than tougher.

Lastly, the authors performed a take a look at that substituted crafted perturbations from the Elevating the Price of Malicious AI-Powered Picture Modifying paper for pure Gaussian noise.

The outcomes adopted the identical sample noticed earlier: throughout all exams, the Proportion Change values remained constructive. Even this random, unstructured noise led to stronger alignment between the generated photos and the prompts.

Impact of simulated safety utilizing Gaussian noise on the Flickr8k dataset.

This supported the underlying rationalization that any added noise, no matter its design, creates better uncertainty for the mannequin throughout technology, permitting the textual content immediate to exert much more management over the ultimate picture.

Conclusion

The analysis scene has been pushing adversarial perturbation on the LDM copyright situation for nearly so long as LDMs have been round; however no resilient options have emerged from the extraordinary variety of papers revealed on this tack.

Both the imposed disturbances excessively decrease the standard of the picture, or the patterns show to not be resilient to manipulation and transformative processes.

Nonetheless, it’s a onerous dream to desert, for the reason that different would appear to be third-party monitoring and provenance frameworks such because the Adobe-led C2PA scheme, which seeks to take care of a chain-of-custody for photos from the digital camera sensor on, however which has no innate reference to the content material depicted.

In any case, if adversarial perturbation is definitely making the issue worse, as the brand new paper signifies may very well be true in lots of circumstances, one wonders if the seek for copyright safety through such means falls underneath ‘alchemy’.

First revealed Monday, June 9, 2025