When you’re trying to communicate or understand ideas, words don’t always do the trick. Sometimes the more efficient approach is to do a simple sketch of that concept. For example, diagramming a circuit might help make sense of how the system works.

But what if artificial intelligence could help us explore these visualizations? While these systems are often proficient at creating realistic paintings and cartoonish drawings, many models fail to capture the essence of sketching: its stroke-by-stroke, iterative process, which helps humans brainstorm and edit how they want to represent their ideas.

A new drawing system from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and Stanford University can sketch more like we do. Their method, called “SketchAgent,” uses a multimodal language model (an AI system that trains on text and images, such as Anthropic’s Claude 3.5 Sonnet) to turn natural language prompts into sketches in a few seconds. For example, it can doodle a house either on its own or through collaboration, drawing with a human or incorporating text-based input to sketch each part separately.

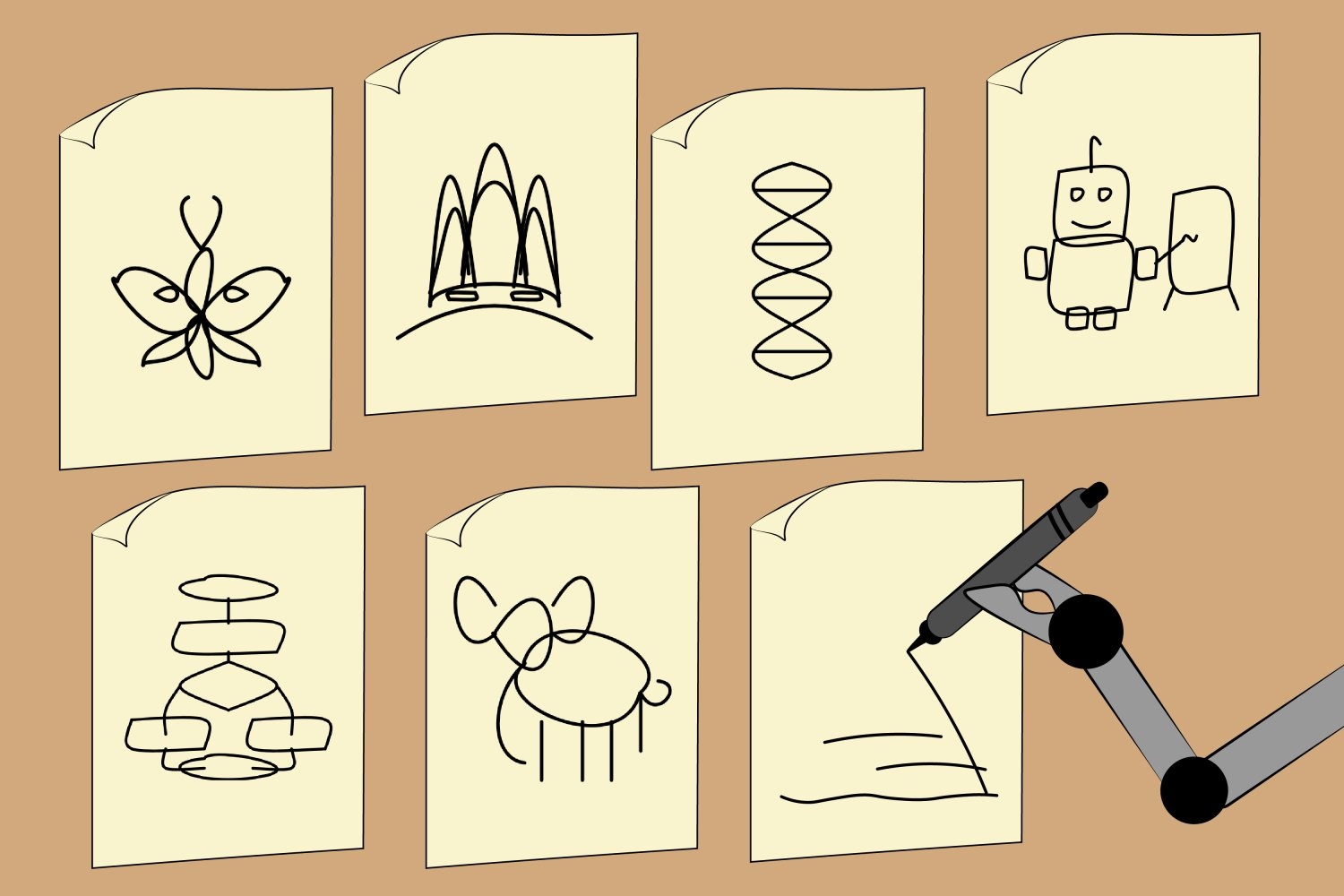

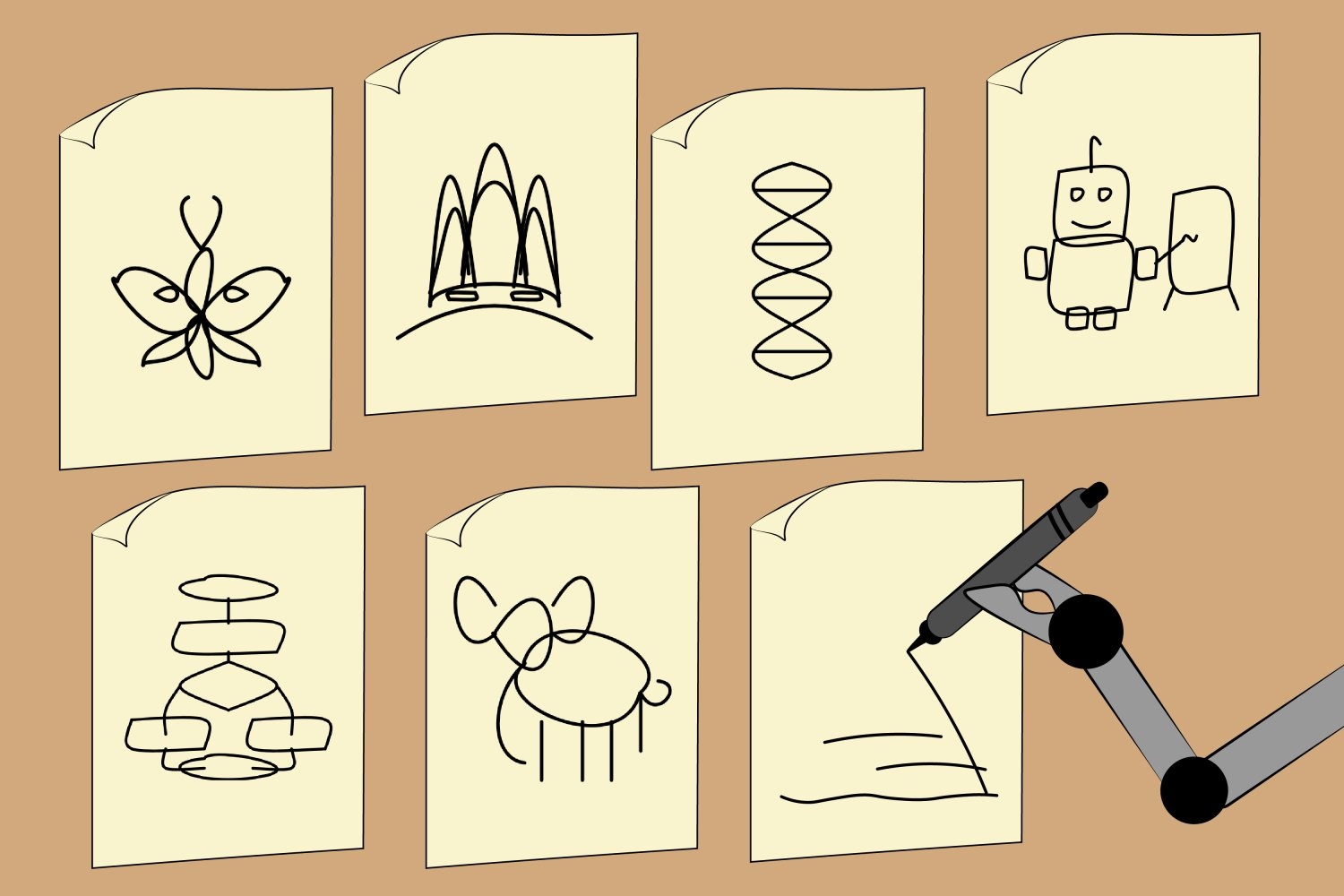

The researchers showed that SketchAgent can create abstract drawings of diverse concepts, like a robot, butterfly, DNA helix, flowchart, and even the Sydney Opera House. One day, the tool could be expanded into an interactive art game that helps teachers and researchers diagram complex concepts or gives users a quick drawing lesson.

CSAIL postdoc Yael Vinker, who is the lead author of a paper introducing SketchAgent, notes that the system introduces a more natural way for humans to communicate with AI.

“Not everyone is aware of how much they draw in their daily life. We may draw our thoughts or workshop ideas with sketches,” she says. “Our tool aims to emulate that process, making multimodal language models more useful in helping us visually express ideas.”

SketchAgent teaches these models to draw stroke-by-stroke without training on any data. Instead, the researchers developed a “sketching language” in which a sketch is translated into a numbered sequence of strokes on a grid. The system was given an example of how things like a house would be drawn, with each stroke labeled according to what it represented (for instance, the seventh stroke was a rectangle labeled “front door”), to help the model generalize to new concepts.
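To make the idea of a “sketching language” concrete, here is a minimal Python sketch of one possible encoding. It assumes a square grid of 50 by 50 cells addressed as `x<column>y<row>`, with each stroke stored as an ordered list of cells plus a semantic label. The grid size, the cell naming, and the names `Stroke`, `Sketch`, and `rect` are hypothetical illustrations, not the format used in the SketchAgent paper.

```python
from dataclasses import dataclass, field

# Hypothetical canvas resolution: a GRID_SIZE x GRID_SIZE grid of cells,
# numbered from 1 in both directions (an assumption, not the paper's spec).
GRID_SIZE = 50


@dataclass
class Stroke:
    index: int                      # 1-based position in the drawing order
    label: str                      # semantic part the stroke represents
    points: list[tuple[int, int]]   # grid cells (column, row) visited in order

    def __post_init__(self) -> None:
        # Reject coordinates that fall outside the assumed grid.
        for x, y in self.points:
            if not (1 <= x <= GRID_SIZE and 1 <= y <= GRID_SIZE):
                raise ValueError(f"cell {(x, y)} is outside the {GRID_SIZE}x{GRID_SIZE} grid")


@dataclass
class Sketch:
    concept: str
    strokes: list[Stroke] = field(default_factory=list)

    def add(self, label: str, points: list[tuple[int, int]]) -> Stroke:
        """Append a labeled stroke; its number is its position in the sequence."""
        stroke = Stroke(len(self.strokes) + 1, label, list(points))
        self.strokes.append(stroke)
        return stroke

    def to_text(self) -> str:
        """Serialize the sketch as numbered, labeled strokes over grid cells."""
        lines = [f"concept: {self.concept}"]
        for s in self.strokes:
            cells = " ".join(f"x{x}y{y}" for x, y in s.points)
            lines.append(f"stroke {s.index} ({s.label}): {cells}")
        return "\n".join(lines)


def rect(x0: int, y0: int, x1: int, y1: int) -> list[tuple[int, int]]:
    """Closed rectangle outline as five corner cells (first corner repeated)."""
    return [(x0, y0), (x1, y0), (x1, y1), (x0, y1), (x0, y0)]


# A toy house whose seventh stroke is a rectangle labeled "front door".
house = Sketch("house")
house.add("walls", rect(10, 25, 40, 45))
house.add("left roof slope", [(10, 25), (25, 10)])
house.add("right roof slope", [(25, 10), (40, 25)])
house.add("chimney", rect(32, 12, 35, 20))
house.add("left window", rect(13, 29, 19, 35))
house.add("right window", rect(31, 29, 37, 35))
house.add("front door", rect(22, 35, 28, 45))
print(house.to_text())
```

Keeping the label next to each stroke mirrors the labeled house example described above: the text form is something a language model can read and continue, while the numbered order preserves the stroke-by-stroke history.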

Vinker wrote the paper alongside three CSAIL affiliates (postdoc Tamar Rott Shaham, undergraduate researcher Alex Zhao, and MIT Professor Antonio Torralba), as well as Stanford University Research Fellow Kristine Zheng and Assistant Professor Judith Ellen Fan. They’ll present their work at the 2025 Conference on Computer Vision and Pattern Recognition (CVPR) this month.

Assessing AI’s sketching abilities

While text-to-image models such as DALL-E 3 can create intriguing drawings, they lack a crucial component of sketching: the spontaneous, creative process where each stroke can impact the overall design. SketchAgent’s drawings, by contrast, are modeled as a sequence of strokes, appearing more natural and fluid, like human sketches.

Prior works have mimicked this process, too, but they trained their models on human-drawn datasets, which are often limited in scale and diversity. SketchAgent instead uses pre-trained language models, which are knowledgeable about many concepts but don’t know how to sketch. When the researchers taught language models this process, SketchAgent began to sketch diverse concepts it hadn’t explicitly been trained on.

Still, Vinker and her colleagues wanted to see whether SketchAgent was actively working with humans on the sketching process or working independently of its drawing partner. The team tested their system in collaboration mode, where a human and a language model work toward drawing a particular concept in tandem. Removing SketchAgent’s contributions revealed that their tool’s strokes were essential to the final drawing. In a drawing of a sailboat, for instance, removing the artificial strokes representing a mast made the overall sketch unrecognizable.
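The collaboration-and-removal test can be pictured with a small simulation. The Python sketch below is a hypothetical illustration, not SketchAgent’s code: it alternates turns between a human and an agent on a shared canvas, tags every stroke with its author, and then removes the agent’s strokes. `scripted_agent` is a placeholder for what would really be a call to a multimodal language model, and judging whether the remaining sketch is still recognizable (as the researchers did) is outside the scope of this toy.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class AuthoredStroke:
    author: str                          # "human" or "agent"
    label: str                           # part of the concept the stroke depicts
    points: tuple[tuple[int, int], ...]  # grid cells visited in order


# A proposer sees the canvas so far and returns its next stroke, or None to pass.
Proposer = Callable[[tuple[AuthoredStroke, ...]], Optional[AuthoredStroke]]


def collaborate(human_strokes: list[AuthoredStroke], agent: Proposer) -> list[AuthoredStroke]:
    """Alternate turns: after every human stroke, the agent may add one stroke."""
    canvas: list[AuthoredStroke] = []
    for stroke in human_strokes:
        canvas.append(stroke)
        proposal = agent(tuple(canvas))
        if proposal is not None:
            canvas.append(proposal)
    return canvas


def ablate(canvas: list[AuthoredStroke], author: str) -> list[AuthoredStroke]:
    """Drop every stroke contributed by `author`, keeping the others in order."""
    return [s for s in canvas if s.author != author]


def scripted_agent(canvas: tuple[AuthoredStroke, ...]) -> Optional[AuthoredStroke]:
    """Hypothetical stand-in for a model call: add a mast once a hull exists."""
    labels = {s.label for s in canvas}
    if "hull" in labels and "mast" not in labels:
        return AuthoredStroke("agent", "mast", ((25, 10), (25, 35)))
    return None


human = [
    AuthoredStroke("human", "hull", ((10, 35), (40, 35), (35, 42), (15, 42), (10, 35))),
    AuthoredStroke("human", "sail", ((26, 12), (38, 32), (26, 32))),
]
boat = collaborate(human, scripted_agent)
print([s.label for s in boat])                   # ['hull', 'mast', 'sail']
print([s.label for s in ablate(boat, "agent")])  # ['hull', 'sail']
```

Recording the author on each stroke is what makes the ablation a one-line filter; without that bookkeeping, the agent’s contribution could not be separated from the human’s after the fact.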

In another experiment, CSAIL and Stanford researchers plugged different multimodal language models into SketchAgent to see which could create the most recognizable sketches. Their default backbone model, Claude 3.5 Sonnet, generated the most human-like vector graphics (essentially text-based files that can be converted into high-resolution images). It outperformed models like GPT-4o and Claude 3 Opus.
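Because vector graphics are plain text, a stroke sequence can be turned into an image file with a few lines of code. The minimal Python sketch below writes each stroke as an SVG `<polyline>`, assuming strokes are lists of (column, row) grid cells and that each cell maps to a square of `cell_px` pixels. The function names and the straight-line rendering are illustrative assumptions; a real system might draw smooth curves instead.

```python
def cell_center(x: int, y: int, cell_px: float) -> tuple[float, float]:
    """Pixel coordinates of the center of grid cell (x, y); cells are 1-based."""
    return (x - 0.5) * cell_px, (y - 0.5) * cell_px


def strokes_to_svg(strokes: list[list[tuple[int, int]]],
                   grid_size: int = 50,
                   cell_px: float = 10) -> str:
    """Render each stroke as an SVG polyline through its cell centers."""
    size = grid_size * cell_px
    parts = [
        f'<svg xmlns="http://www.w3.org/2000/svg" width="{size:g}" height="{size:g}" '
        f'viewBox="0 0 {size:g} {size:g}">'
    ]
    for stroke in strokes:
        coords = " ".join(
            f"{px:g},{py:g}" for px, py in (cell_center(x, y, cell_px) for x, y in stroke)
        )
        parts.append(
            f'  <polyline points="{coords}" fill="none" stroke="black" '
            'stroke-width="2" stroke-linecap="round" stroke-linejoin="round"/>'
        )
    parts.append("</svg>")
    return "\n".join(parts)


# Two strokes forming a simple roof on a 50 x 50 grid.
roof = [[(10, 25), (25, 10)], [(25, 10), (40, 25)]]
svg_small = strokes_to_svg(roof, cell_px=4)   # 200 x 200 px canvas
svg_large = strokes_to_svg(roof, cell_px=40)  # 2000 x 2000 px, same drawing
print(svg_small)
```

Since the output stores coordinates rather than pixels, the same strokes can be re-rendered at any resolution just by changing `cell_px`, which is what makes vector output convenient for high-resolution images.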

“The fact that Claude 3.5 Sonnet outperformed other models like GPT-4o and Claude 3 Opus suggests that this model processes and generates visual-related information differently,” says co-author Tamar Rott Shaham.

She adds that SketchAgent could become a helpful interface for collaborating with AI models beyond standard, text-based communication. “As models advance in understanding and generating other modalities, like sketches, they open up new ways for users to express ideas and receive responses that feel more intuitive and human-like,” says Rott Shaham. “This could significantly enrich interactions, making AI more accessible and versatile.”

While SketchAgent’s drawing prowess is promising, it can’t make professional sketches yet. It renders simple representations of concepts using stick figures and doodles, but struggles to doodle things like logos, sentences, complex creatures like unicorns and cows, and specific human figures.

At times, their model also misunderstood users’ intentions in collaborative drawings, like when SketchAgent drew a bunny with two heads. According to Vinker, this may be because the model breaks down each task into smaller steps (also called “Chain of Thought” reasoning). When working with humans, the model creates a drawing plan, potentially misinterpreting which part of that outline a human is contributing to. The researchers could possibly refine these drawing skills by training on synthetic data from diffusion models.
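One way to picture that failure mode: if the agent keeps a checklist of planned parts and marks a human’s stroke as the wrong part, it will redraw a part that already exists. The toy Python simulation below assumes a plan is an ordered list of part names and that, after each human turn, the agent draws the first part it believes is still missing. `run_turns` and the `attribution` mapping are hypothetical names for this illustration of Vinker’s explanation, not a description of SketchAgent’s internals.

```python
from typing import Optional


def next_part(plan: list[str], believed_done: set[str]) -> Optional[str]:
    """First planned part the agent believes is still missing, if any."""
    for part in plan:
        if part not in believed_done:
            return part
    return None


def run_turns(plan: list[str], human_parts: list[str],
              attribution: dict[str, str]) -> list[str]:
    """Simulate alternating turns and return the parts actually drawn, in order.

    human_parts: the part the human really draws on each turn.
    attribution: maps a human part to the part the agent *thinks* it is;
    a wrong entry models the agent misreading the human's contribution.
    """
    canvas: list[str] = []
    believed_done: set[str] = set()
    for drawn in human_parts:
        canvas.append(drawn)
        believed_done.add(attribution.get(drawn, drawn))
        part = next_part(plan, believed_done)
        if part is not None:
            canvas.append(part)
            believed_done.add(part)
    return canvas


plan = ["head", "ears", "body", "legs"]
print(run_turns(plan, ["head", "body"], {}))                # ['head', 'ears', 'body', 'legs']
print(run_turns(plan, ["head", "body"], {"head": "body"}))  # ['head', 'head', 'body', 'ears']
```

With correct attribution the bunny is completed normally; when the human’s head is mistaken for the body, the agent still believes the head is missing and draws a second one.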

Additionally, SketchAgent often requires several rounds of prompting to generate human-like doodles. In the future, the team aims to make it easier to interact and sketch with multimodal language models, including by refining their interface.

Still, the tool suggests AI could draw diverse concepts the way humans do, with step-by-step human-AI collaboration that results in more aligned final designs.

This work was supported, in part, by the U.S. National Science Foundation, a Hoffman-Yee Grant from the Stanford Institute for Human-Centered AI, the Hyundai Motor Co., the U.S. Army Research Laboratory, the Zuckerman STEM Leadership Program, and a Viterbi Fellowship.